STCLOUD_mod7_storage FULL


check 20250525 STCLOUD Storage FULL

Module 7: Storage

Core AWS Services RECAP

- Networks

- Compute

- Database

- Directory Services

there are many services out there. a public cloud has to support many use cases and organizations, so don't be afraid of using the cloud; chances are there is a service that will help you.

cloud is powerful because it has lots of options.

- Amazon Virtual Private Cloud (VPC) - Networks

- Amazon EC2 - Compute

- VMs → highest amount of control, and responsibility, hardware virtualization

- Containers → OS virtualizations, lightweight, focused on software applications and deployment of applications

- Serverless → kind of like PaaS; you focus only on your own code (no need to think about infra), triggered by API calls, often the cheapest option (you pay only each time an API call is made)

- PaaS/Orchestration → focus on your code/application/website and don't think about infra anymore; the service deploys the resources for you

- Storage

- Amazon S3 → object storage like GDrive

- great for static files (images, videos, things that don't change)

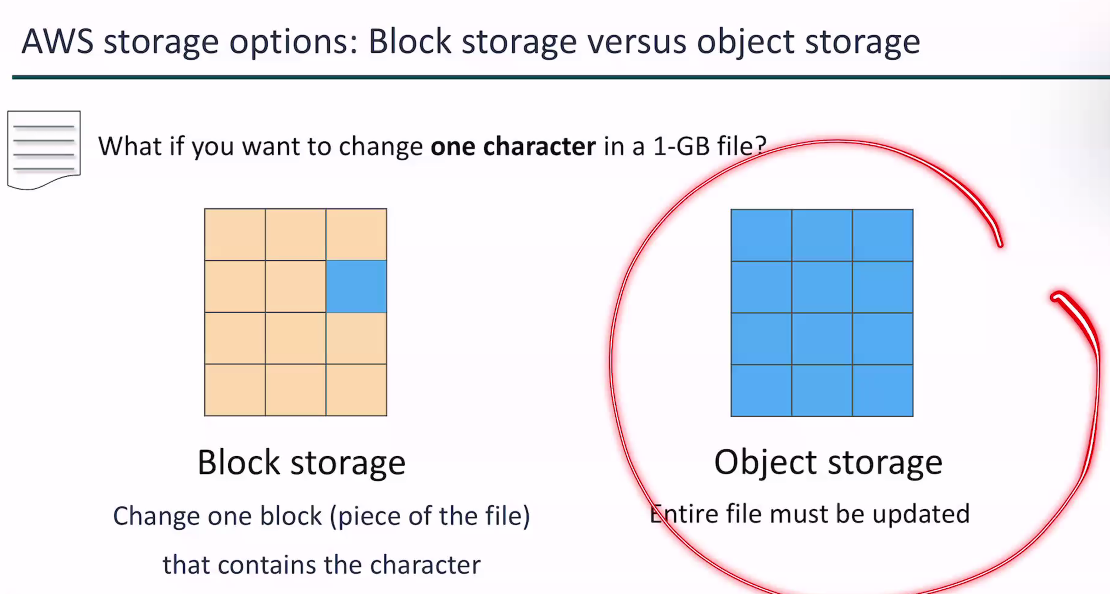

- each file is a full object, if you have to update something you need to upload a full new copy

- Amazon EBS → block storage

- each file is chopped into blocks. for example, if each block is 512 KB, a 2 MB file will consume 4 blocks.

- great for changing files

- VM/CT

- HDD/SSD

- has OS

- Example:

- Amazon EFS → Network File System (NFS)

- great for high-scaling storage, file sharing with multiple devices

- non-root disk (not the one holding the OS)

- Example:

- Amazon S3 Glacier -> Archival Storage

- mostly for archives, long-term storage

- cheapest price per GB

- not instant retrieval (takes a few minutes to a few hours), like a cassette tape

- sample use cases would be logs and old records (e.g. 1-2 years after a student graduates, their info is moved to archival storage)


- Database

- Amazon Relational Database Service (Amazon RDS) → managed SQL DB

- managed service (focus on your data, the cloud manages the DB for you)

- SQL-type

- if you know how to manage your own DB, you can just go for compute

- typically scaled vertically (a bigger instance); suits structured data with a fixed schema (many entries, same columns)

- Amazon DynamoDB → NoSQL service

- think MongoDB, JSON, key-value pairs

- good for horizontal scaling; flexible schema (entries can have different key-value pairs)

- Amazon Redshift → Data Warehousing

- normally for big data, large volume

- analytics

- Amazon Aurora → Enterprise SQL

- SQL

- high performance

- clustering

- aggregation


- AWS Identity and Access Management → Directory Services

- users, group, role, policies

- authentication (who you are) and authorization (what you are allowed to do)

AWS Storage Options: Block Storage vs. Object Storage


- block storage → you can change just one piece (block) of the file

- object storage → the whole object has to be re-uploaded to change it, so it suits static files like in GDrive
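To make the difference concrete, here is a rough sketch of how much data gets rewritten for a small edit under each model. It assumes the illustrative 512 KB block size from the recap above; the function names are made up for this example and are not AWS APIs.

```python
import math

BLOCK_SIZE = 512 * 1024  # 512 KB blocks, the illustrative size used in these notes


def blocks_needed(file_size: int, block_size: int = BLOCK_SIZE) -> int:
    """Number of blocks a file of file_size bytes occupies."""
    return math.ceil(file_size / block_size)


def bytes_rewritten_block(offset: int, length: int, block_size: int = BLOCK_SIZE) -> int:
    """Block storage: only the blocks touched by the edit are rewritten."""
    first = offset // block_size
    last = (offset + length - 1) // block_size
    return (last - first + 1) * block_size


def bytes_rewritten_object(file_size: int) -> int:
    """Object storage: the whole object is uploaded again."""
    return file_size


file_size = 2 * 1024 * 1024  # a 2 MB file
print(blocks_needed(file_size))             # 4
print(bytes_rewritten_block(600_000, 100))  # 524288 (one block)
print(bytes_rewritten_object(file_size))    # 2097152 (full copy)
```

A 100-byte change costs one block on block storage but a full 2 MB upload on object storage, which is why object storage is the better fit for files that rarely change.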

this is where the recording for july 29 starts

Amazon Elastic Block Store (Amazon EBS)

- enables you to create individual storage volumes and attach them to an Amazon EC2 Instance

- Amazon EBS offers block-level storage

- volumes are automatically replicated within their Availability Zone

- can be backed up automatically to Amazon S3 (object storage) through Snapshots

- uses:

- boot volumes and storage for Amazon Elastic Compute Cloud (Amazon EC2) instances (VMs)

- data storage with a file system (NTFS, exFAT, FAT32)

- database hosts

- Enterprise applications

Amazon EBS Volume Types and Use Cases

| Storage type | Solid State Drives (SSD) | Solid State Drives (SSD) | Hard Disk Drives (HDD) | Hard Disk Drives (HDD) |
|---|---|---|---|---|
| Volume type | General Purpose | Provisioned IOPS | Throughput Optimized | Cold |
| Max volume size | 16 TiB | 16 TiB | 16 TiB | 16 TiB |
| Max IOPS per volume | 16,000 | 64,000 | 500 | 250 |
| Max throughput per volume | 250 MiB/s | 1,000 MiB/s | 500 MiB/s | 250 MiB/s |

| General Purpose (SSD) | Provisioned IOPS (SSD) | Throughput Optimized (HDD) | Cold (HDD) |
|---|---|---|---|
| recommended for most workloads | critical business applications that require sustained IOPS performance, or more than 16,000 IOPS or 250 MiB/s of throughput per volume | streaming workloads that require consistent, fast throughput at a low price | throughput-oriented storage for large volumes of data that is infrequently accessed |
| system boot volumes (holds the OS) | large database workloads | big data | scenarios where the lowest storage cost is important |
| virtual desktops | more for enterprise use | data warehouses | cannot be a boot volume (no OS) |
| low-latency interactive applications | high data transfer | log processing | - |
| development and test environments | - | cannot be a boot volume (no OS) | - |

- boot volumes can only be SSD
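As a rough illustration of how the two tables can be used together, the sketch below picks a volume type from required IOPS, throughput, and whether the volume must boot an OS. The limits are copied from the table above. The "try the cheaper types first" ordering and the function name are assumptions made for this example, not an AWS sizing rule.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class VolumeType:
    name: str
    media: str               # "SSD" or "HDD"
    max_iops: int            # max IOPS per volume
    max_throughput_mib: int  # max MiB/s per volume
    bootable: bool


# Limits from the table above. The ordering (roughly cheapest per GB first)
# is an assumption for this sketch.
VOLUME_TYPES = [
    VolumeType("Cold", "HDD", 250, 250, False),
    VolumeType("Throughput Optimized", "HDD", 500, 500, False),
    VolumeType("General Purpose", "SSD", 16_000, 250, True),
    VolumeType("Provisioned IOPS", "SSD", 64_000, 1_000, True),
]


def pick_volume_type(iops: int, throughput_mib: int, boot: bool) -> Optional[str]:
    """Return the first listed type that satisfies all requirements, or None."""
    for vt in VOLUME_TYPES:
        if boot and not vt.bootable:
            continue
        if vt.max_iops >= iops and vt.max_throughput_mib >= throughput_mib:
            return vt.name
    return None


print(pick_volume_type(3_000, 100, boot=True))    # General Purpose
print(pick_volume_type(200, 400, boot=False))     # Throughput Optimized
print(pick_volume_type(20_000, 200, boot=False))  # Provisioned IOPS
```

Real sizing also depends on price, burst behavior, and access pattern, so treat this only as a way to read the table.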

Amazon EBS Features

- Snapshots - point-in-time snapshots, recreate a new volume at any time, like Time Machine or a save state

- Encryption - encrypted Amazon EBS volumes, no additional cost

- per the lecture, expect that this may consume a bit more space

- per the lecture, it may be a bit slower with encryption on (AWS describes the effect on latency as minimal)

- there's no additional charge, but turning it on is your choice and your responsibility

- Elasticity - increase capacity, change to different types

- scale up

regarding...

data at rest → customer is responsible

data in transit → customer is responsible

- encryption is a feature and a choice to activate

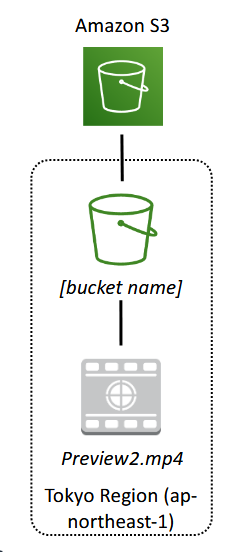

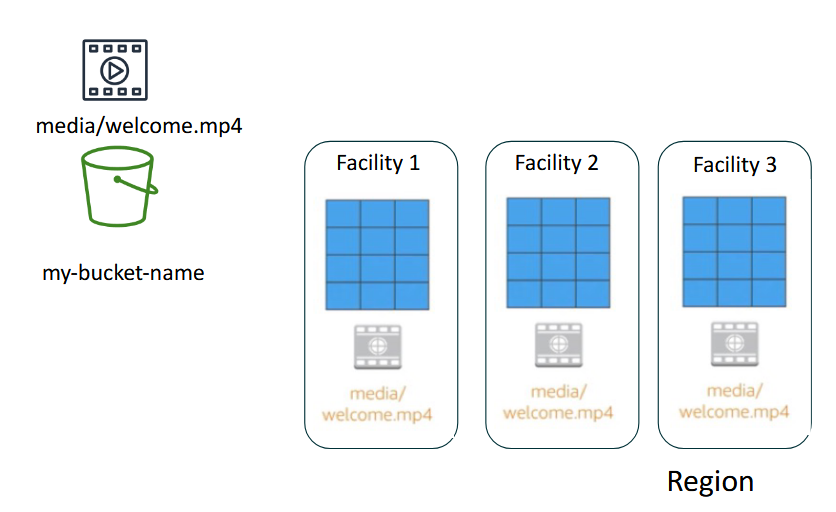

Amazon Simple Storage Service (Amazon S3) - Object Storage

- data is stored as objects in buckets

- virtually unlimited storage, but a single object is limited to 5 TB

- designed for 11 9s of durability

- 99.999999999% annual durability means that for every 10,000,000 objects stored, you can expect (on average) to lose a single object every 10,000 years (a 99.99...% chance your data will NOT be lost)

- in practice, data loss due to the cloud provider's infrastructure is a non-concern because the chance is so low. it's more likely you'll lose a file to user error, application bugs, or malicious attacks than to the underlying cloud storage failing.

- granular access to bucket and objects
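The "one object every 10,000 years" figure above follows directly from the 11 9s number. A quick check, using exact fractions so floating-point rounding doesn't blur the result (variable names are just for this example):

```python
from fractions import Fraction

durability = Fraction(99_999_999_999, 100_000_000_000)  # 99.999999999%, i.e. 11 nines
annual_loss_probability = 1 - durability                # chance of losing one given object in a year

objects_stored = 10_000_000
expected_losses_per_year = annual_loss_probability * objects_stored
years_per_lost_object = 1 / expected_losses_per_year

print(annual_loss_probability)   # 1/100000000000
print(expected_losses_per_year)  # 1/10000
print(years_per_lost_object)     # 10000
```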

Amazon S3 (object storage) Classes

Amazon S3 offers a range of object-level storage classes that are designed for different use cases:

- Standard → kind of like Google Drive

- Intelligent-Tiering

- Standard-Infrequent Access (Amazon S3 Standard-IA)

- One Zone-Infrequent Access (Amazon S3 One Zone-IA)

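- Amazon S3 Glacier

- Amazon S3 Glacier Deep Archive

(as you move down the list, storage generally becomes cheaper, and the archive classes at the bottom are also slower to retrieve from)

what's happening here is that the longer a file goes unused, the lower the class it is moved to (automatically with Intelligent-Tiering, or through lifecycle policies)

infrequent access: for data you don't use as often

one zone: data is stored in a single Availability Zone instead of being spread across multiple Availability Zones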

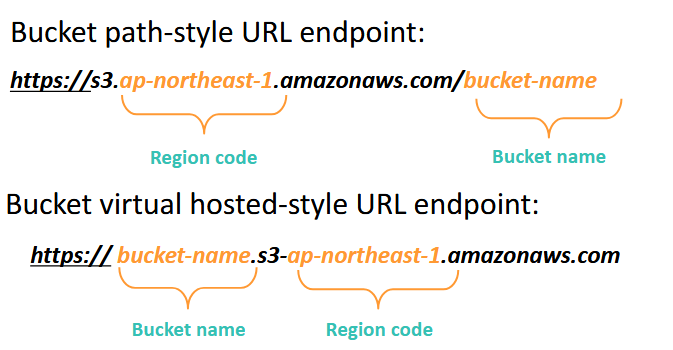

Amazon S3 (object storage) bucket URLs (2 styles)

to upload your data

- create a bucket in an AWS region

- upload almost any number of objects to the bucket

Data is redundantly stored in the region

- high durability: if one fails, then you can just go to the other one that has a copy

- backups, redundancy

Access the data anywhere

because it's a managed service exposed over the internet, you can reach it from anywhere through:

- on AWS Management console (web gui)

- AWS command line interface (CLI)

- SDK

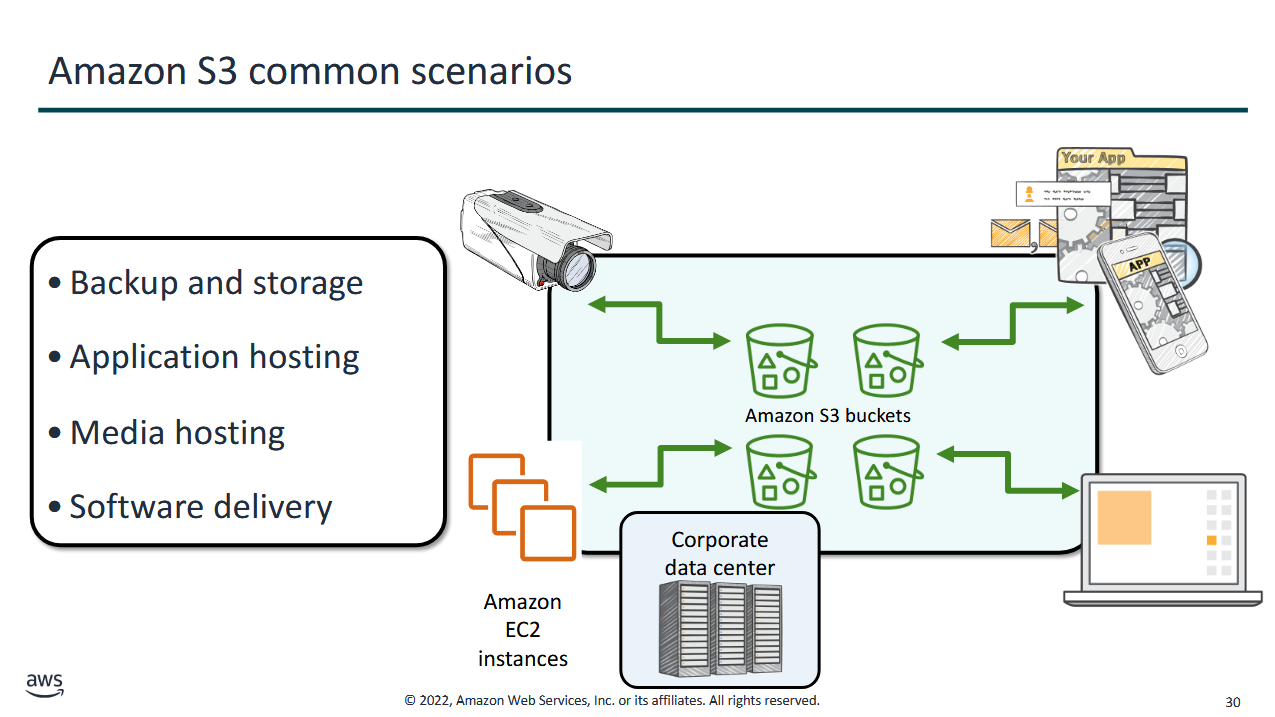
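The two bucket URL styles named in the heading are usually called virtual-hosted-style (bucket name in the hostname) and path-style (bucket name in the path). A small sketch of how each URL is put together; the bucket, Region, and key below are made-up values, and the helper names are not part of any SDK.

```python
from urllib.parse import quote


def virtual_hosted_url(bucket: str, region: str, key: str) -> str:
    """Virtual-hosted-style: the bucket name is part of the hostname."""
    return f"https://{bucket}.s3.{region}.amazonaws.com/{quote(key)}"


def path_style_url(bucket: str, region: str, key: str) -> str:
    """Path-style: the bucket name is the first segment of the path."""
    return f"https://s3.{region}.amazonaws.com/{bucket}/{quote(key)}"


# Hypothetical bucket, Region, and object key, for illustration only.
bucket, region, key = "example-notes-bucket", "ap-southeast-1", "slides/module 7.pdf"

print(virtual_hosted_url(bucket, region, key))
# https://example-notes-bucket.s3.ap-southeast-1.amazonaws.com/slides/module%207.pdf
print(path_style_url(bucket, region, key))
# https://s3.ap-southeast-1.amazonaws.com/example-notes-bucket/slides/module%207.pdf
```

Whether a URL actually returns the object still depends on the bucket's access settings; these helpers only build the address.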

Common Use Cases and Scenarios

use cases

- storing application assets

- static web hosting

- backup and disaster recovery (DR)

- staging area for big data

- and so on...

mostly static data

- CCTV Footage

- application assets

- documents in the cloud

Amazon S3 Storage Pricing

to estimate the costs, consider the following:

- Storage class type

- Standard storage is designed for 11 9s of durability and 4 9s of availability (99.99%). taking 100% = 365 days: 90% availability means 36.5 days of downtime per year, 99% means 3.65 days, 99.9% means 0.365 of a day (about 8.8 hours), and 99.99% means about 53 minutes

- S3 Standard-Infrequent Access (S3 Standard-IA) is designed for 11 9s of durability and 3 9s of availability (99.9%)

- Amount of storage

- the number and size of objects

- Requests

- the number and type of requests (GET/PUT/COPY)

- type of requests: different rates for GET requests than other requests

- when you request, you also do a data transfer

- Data Transfer

- pricing is based on the amount of data that is transferred out of the Amazon S3 Region

- data transfer in is free, but you incur charges for data that is transferred out


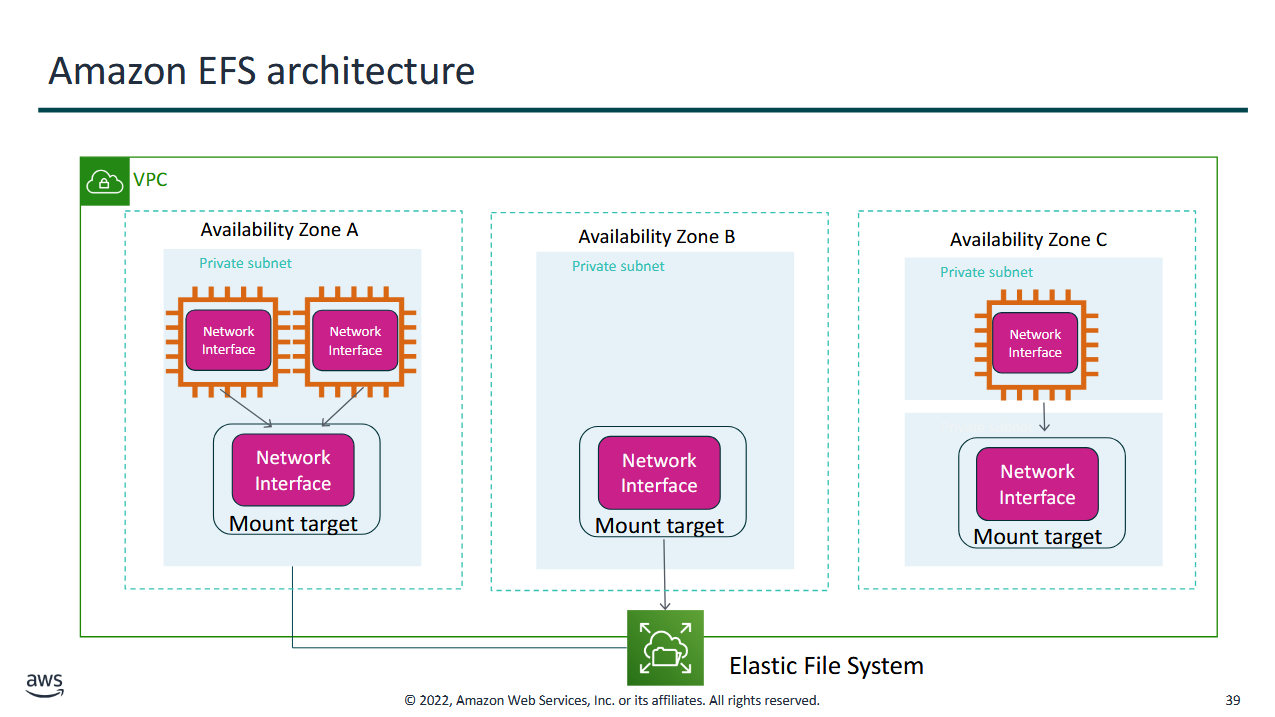
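The availability percentages in the storage class bullets translate to yearly downtime with one line of arithmetic. A minimal sketch, assuming a 365-day year (the function name is just for this example):

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600, ignoring leap years


def downtime_minutes_per_year(availability_percent: float) -> float:
    """Maximum downtime per year implied by an availability percentage."""
    return (1 - availability_percent / 100) * MINUTES_PER_YEAR


for pct in (90, 99, 99.9, 99.99):
    minutes = downtime_minutes_per_year(pct)
    print(f"{pct}% -> {minutes:,.1f} min/year ({minutes / 60 / 24:.3f} days)")
```

So 4 9s (S3 Standard) works out to roughly 53 minutes a year, and 3 9s (S3 Standard-IA) to roughly 8.8 hours.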

Amazon Elastic File System (Amazon EFS)

- file storage in the AWS cloud

- works well for big data and analytics, media processing workflows, content management, web serving, and home directories

- petabyte-scale, low-latency file system

- shared storage ← main purpose of using NFS

- elastic capacity → able to expand

- supports Network File System (NFS) ver 4.0 and 4.1 (NFSv4)

- NFS is good for file sharing or VMs with load balancing

- compatible with all Linux-based AMIs for Amazon EC2

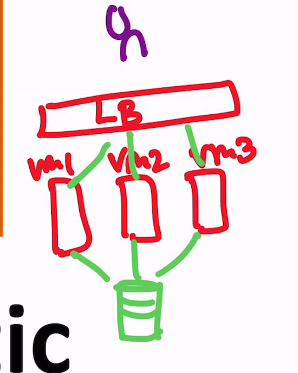

Amazon EFS architecture

the VMs just mount onto the file system

Amazon S3 Glacier (Archival)

the cloud counterpart of magnetic tape archival: long-term cold storage with non-instant data retrieval

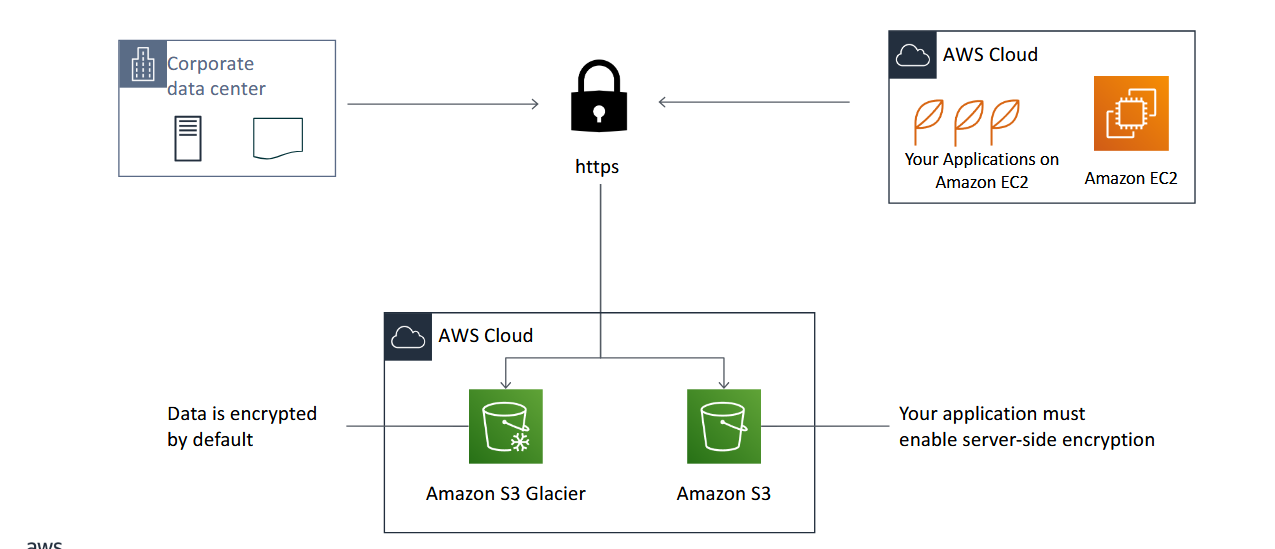

- is a data archiving service that is designed for security, durability, and an extremely low cost

- Amazon S3 Glacier is designed to provide 11 9s of durability for objects (likelihood of data loss is close to zero)

- supports the encryption of data in transit, through Secure Sockets Layer (SSL) or Transport Layer Security (TLS), and at rest

- the Vault Lock feature enforces compliance through a policy

- extremely low-cost design works well for long-term archiving

- provides 3 options for access to archives - expedited, standard, and bulk

- retrieval times range from a few minutes to several hours

- storage service for low-cost data archiving and long-term backup

- you can configure lifecycle archiving of Amazon S3 content to Amazon S3 Glacier

- retrieval options

- standard: 3-5 hours

- bulk: 5-12 hours

- expedited: 1-5 min
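One way to read the three retrieval options: pick the slowest one that still meets your deadline. The sketch below uses the worst-case times from the list above. The assumption that slower tiers are cheaper, and the function name, are made up for this example and are not taken from the notes.

```python
from typing import Optional

# (tier, worst-case retrieval time in hours), from the list above.
# Ordered slowest first, on the assumption that slower retrieval costs less.
RETRIEVAL_TIERS = [
    ("bulk", 12.0),
    ("standard", 5.0),
    ("expedited", 5 / 60),
]


def pick_retrieval_tier(deadline_hours: float) -> Optional[str]:
    """Return the slowest tier whose worst case still fits the deadline."""
    for name, worst_case_hours in RETRIEVAL_TIERS:
        if worst_case_hours <= deadline_hours:
            return name
    return None  # even expedited may not make it in time


print(pick_retrieval_tier(24))  # bulk
print(pick_retrieval_tier(6))   # standard
print(pick_retrieval_tier(1))   # expedited
```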

Amazon S3 Glacier Use Cases

- media asset archiving

- healthcare information archiving

- regulatory and compliance archiving

- scientific data archiving

- digital preservation

- magnetic tape replacement

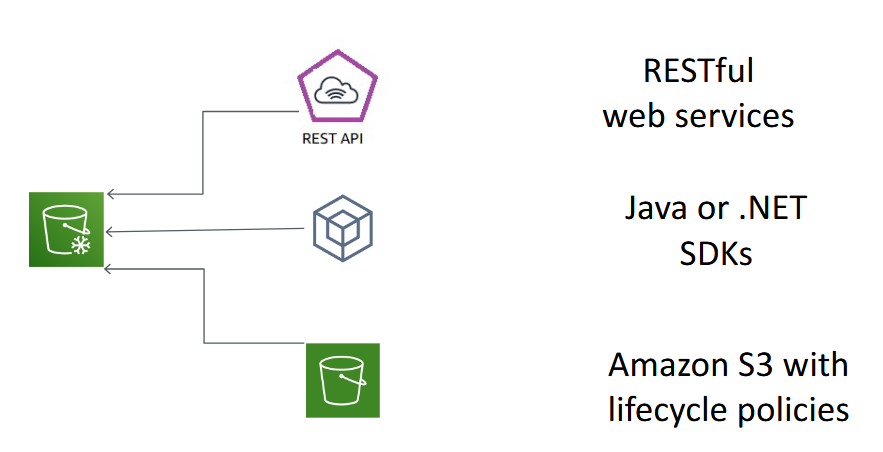

Using Amazon S3 Glacier

- you can move objects from S3 buckets to Glacier (with lifecycle policies)

- you can use SDKs to make it programmable

- you can also use REST APIs

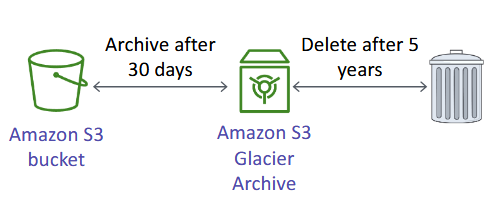

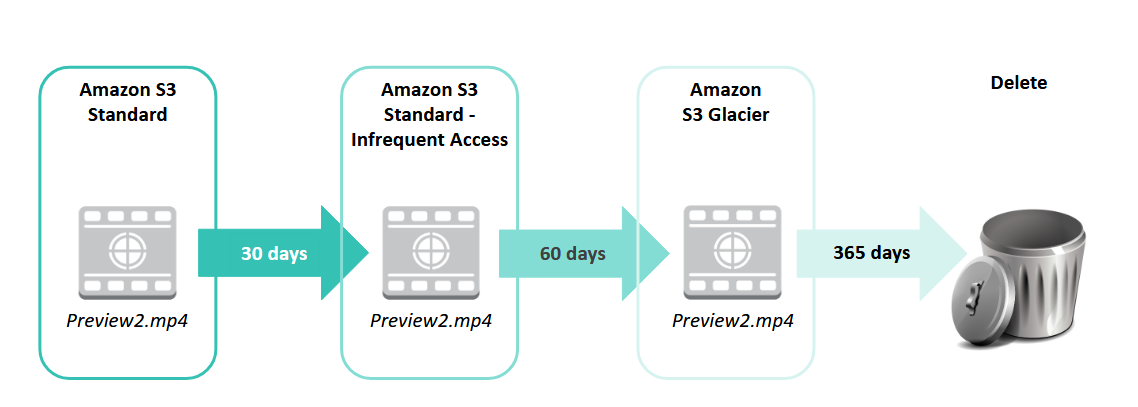

Amazon S3 Glacier Lifecycle Policies

- Amazon S3 lifecycle policies enable you to delete or move objects based on age

- it can be in standard, then can move to infrequent access, then glacier, etc.

- you can configure it to be deleted

- lifecycle → data from its birth to its death based on the policy you set up
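A lifecycle policy is just a small rule document. Below is a sketch of one in the shape that boto3's `put_bucket_lifecycle_configuration` accepts, plus a helper that simulates which class an object would be in at a given age. The rule ID, prefix, and day counts are hypothetical, and the helper is only for illustration; S3 applies the rule itself.

```python
from typing import Optional

# Hypothetical rule: logs go to Standard-IA after 30 days, Glacier after 90,
# and are deleted after 2 years.
lifecycle_configuration = {
    "Rules": [
        {
            "ID": "archive-then-delete",    # made-up rule name
            "Status": "Enabled",
            "Filter": {"Prefix": "logs/"},  # made-up key prefix
            "Transitions": [
                {"Days": 30, "StorageClass": "STANDARD_IA"},
                {"Days": 90, "StorageClass": "GLACIER"},
            ],
            "Expiration": {"Days": 730},
        }
    ]
}


def storage_class_at(age_days: int, rule: dict) -> Optional[str]:
    """Simulate the rule: class of an object at a given age, None if deleted."""
    if age_days >= rule["Expiration"]["Days"]:
        return None
    current = "STANDARD"
    for transition in sorted(rule["Transitions"], key=lambda t: t["Days"]):
        if age_days >= transition["Days"]:
            current = transition["StorageClass"]
    return current


rule = lifecycle_configuration["Rules"][0]
for age in (10, 45, 120, 800):
    print(age, storage_class_at(age, rule))

# Applying it for real needs boto3 and AWS credentials (bucket name is hypothetical):
# import boto3
# boto3.client("s3").put_bucket_lifecycle_configuration(
#     Bucket="example-notes-bucket",
#     LifecycleConfiguration=lifecycle_configuration,
# )
```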

Comparison: Amazon S3 vs Glacier

- both are object storages

- if you're doing a lot of upload and download don't put it in Glacier

| | Amazon S3 | Amazon S3 Glacier |
|---|---|---|
| Data volume | no limit | no limit |
| Average latency | milliseconds | minutes to hours |
| Item size | 5 TB max | 40 TB max |
| Cost per GB per month | higher | lower |
| Billed requests | PUT, COPY, POST, LIST, GET | UPLOAD and retrieval (note: you can't get the file immediately; it is moved to S3 first) |
| Retrieval pricing | ¢ per request | ¢¢ per request and per GB |

Server-side encryption for Amazon S3 and Glacier

- application must enable encryption
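As a sketch of what "the application enables encryption" can look like, the helper below builds the arguments for boto3's `put_object` call with server-side encryption requested. The helper name, bucket, and key are hypothetical; the actual upload is left commented out because it needs boto3 and AWS credentials.

```python
def encrypted_put_kwargs(bucket: str, key: str, body: bytes) -> dict:
    """Arguments for an S3 upload that asks S3 to encrypt the object at rest."""
    return {
        "Bucket": bucket,
        "Key": key,
        "Body": body,
        "ServerSideEncryption": "AES256",  # S3-managed keys, AES-256
    }


# Hypothetical bucket and key, for illustration only.
kwargs = encrypted_put_kwargs("example-notes-bucket", "notes/module7.txt", b"storage notes")
print(kwargs["ServerSideEncryption"])  # AES256

# With boto3 installed and credentials configured:
# import boto3
# boto3.client("s3").put_object(**kwargs)
```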

Security with Amazon S3 Glacier

how you secure your storage

- control access with IAM

- Amazon S3 Glacier encrypts your data with AES-256

- Amazon S3 Glacier manages your keys for you